Math for Non-Geeks/ Derivatives

The derivative is one of the central concepts of calculus. For a given function $f$, the derivative $f'$ is another function which specifies the rate of change of $f$ at each point $x$. It is used throughout the sciences, wherever a "rate of change" appears in a dynamical system. Knowing about derivatives means having a powerful tool at hand: it allows you to describe and predict rates of change in a huge variety of applications.

Intuitions of the derivative

The derivative is a mathematical object which is useful in many situations. Depending on the situation, there are several intuitions which can make this abstract object come alive in your mind:

- Derivative as instantaneous rate of change: The derivative corresponds to what we intuitively understand as the rate of change of a function $f$ at some instant $x$. A rate of change ($\tfrac{\Delta f}{\Delta x}$) describes how much a quantity changes ($\Delta f$) in relation to the change of some reference quantity ($\Delta x$). If we let $\Delta x$ run to 0, we get the rate of change within an "infinitely small amount of time". An example is speed: Consider a time-dependent position $x(t)$, i.e. the function $f$ is re-labelled as $x$ and the argument $x$ is re-labelled as $t$. The quotient $\tfrac{\Delta x}{\Delta t}$ of "travelled distance" and "elapsed time" describes the "average speed". In order to get the speed at some time $t$, we make the time difference $\Delta t$ smaller and smaller, such that the "average speed" turns into an "instantaneous speed" $v(t)$. This is called the first derivative and mathematicians write $v(t) = x'(t)$.

- Derivative as tangent slope: The derivative corresponds to the slope of the tangent to the graph at the point where the derivative is taken. Thus the derivative solves the geometric problem of determining the tangent to a graph through a given point.

- Derivative as slope of the locally best linear approximation: Any function that has a derivative at a point can be well approximated by a linear function in a neighbourhood of this point. The derivative corresponds to the slope of this linear function. This is useful if the function is hard to compute: in many cases the linear approximation is much easier to evaluate.

- Derivative as generalised slope: How steep is a given function? At first, the concept of the "slope of a function" is only defined for linear functions. But we can use the derivative to define the "slope" also for non-linear functions.

We will discuss these intuitions in detail in the following and use them to derive a formal definition of the derivative. We will also see that differentiable functions are "kink-free", which is why they are also called smooth functions (think of smoothly bending some dough or cloth).

Derivative as rate of change

Introduction to the derivative

The derivative corresponds to the rate of change of a function $f$. How can this rate of change be determined or defined? Let $f$ be, for example, a real-valued function.

The function $f$ may describe a physical quantity in relation to another quantity. For example, $f(x)$ could correspond to the distance covered by an object at the time $x$. It could also be the air pressure at the altitude $x$ or the population size of a species at the time $x$. Now let us take an argument $\tilde{x}$, where the function has the function value $f(\tilde{x})$.

Let us assume that $f(x)$ is the distance travelled by a car at the time $x$. Then the current rate of change of $f$ at the position $\tilde{x}$ is equal to the velocity of the car at the time $\tilde{x}$.

It is hard to determine the velocity directly with only $f$ given. But we can estimate it. We take a point in time $x_1$ shortly after $\tilde{x}$ and look at the average speed in the time from $\tilde{x}$ to $x_1$. The distance travelled in that time is $f(x_1) - f(\tilde{x})$, while the time difference is $x_1 - \tilde{x}$. Thus the car has the average speed

$$\frac{f(x_1) - f(\tilde{x})}{x_1 - \tilde{x}}$$

This quotient, which indicates the average rate of change of the function $f$ in the interval $[\tilde{x}, x_1]$, is called difference quotient. As its name suggests, it is a quotient of two differences. Geometrically, this difference quotient is equal to the slope of the secant passing through the points $(\tilde{x}, f(\tilde{x}))$ and $(x_1, f(x_1))$.

This average speed is a good approximation of the current speed of our car at the time $\tilde{x}$. It is only an approximation since the movement of the car between $\tilde{x}$ and $x_1$ need not be uniform: it can accelerate or decelerate. But we should get a better result if we shorten the period for calculating the average speed. So let's look at a time $x_2$ which is even closer to $\tilde{x}$ and determine the average speed $\tfrac{f(x_2) - f(\tilde{x})}{x_2 - \tilde{x}}$ for the new time interval between $\tilde{x}$ and $x_2$.

We can shorten the time difference even further by taking a sequence of times $(x_n)_{n \in \mathbb{N}}$ which converges towards $\tilde{x}$. For every $x_n$ we calculate the average speed of the car in the period from $\tilde{x}$ to $x_n$. The shorter the time difference $x_n - \tilde{x}$, the less the car should be able to accelerate or decelerate in this period of time. So the average speed should converge to the current speed of the car at time $\tilde{x}$:

$$\text{velocity at time } \tilde{x} = \lim_{n \to \infty} \frac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$$

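Thus we have found a method to determine the current rate of change of $f$ at time $\tilde{x}$: We take any sequence of arguments $(x_n)_{n \in \mathbb{N}}$, which are all different from $\tilde{x}$ and for which $\lim_{n \to \infty} x_n = \tilde{x}$. For every $x_n$ we determine the quotient $\tfrac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$. The current rate of change is the limit of these quotients:

$$\lim_{n \to \infty} \frac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$$

The derivative of $f$ at $\tilde{x}$ is denoted as $f'(\tilde{x})$. So we have the mathematical definition:

$$f'(\tilde{x}) = \lim_{n \to \infty} \frac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$$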

The limit of the difference quotient is sometimes also called differential quotient.
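The convergence of the difference quotients can be watched numerically. The following is a minimal sketch, assuming the illustrative distance function $f(x) = x^2$, the point $\tilde{x} = 3$ and the sequence $x_n = \tilde{x} + 1/n$; none of these choices come from the text above, and the name `difference_quotient` is hypothetical.

```python
def difference_quotient(f, x_tilde, x):
    """Average rate of change of f between x_tilde and x (requires x != x_tilde)."""
    return (f(x) - f(x_tilde)) / (x - x_tilde)


def f(x):
    # Illustrative assumption: the distance travelled is f(x) = x^2
    return x ** 2


x_tilde = 3.0
ns = [1, 10, 100, 1000, 10000]
# x_n = x_tilde + 1/n lies after x_tilde and converges to x_tilde
quotients = [difference_quotient(f, x_tilde, x_tilde + 1.0 / n) for n in ns]

for n, q in zip(ns, quotients):
    print(f"n = {n:5d}: difference quotient = {q:.6f}")
```

For this particular $f$ the quotient equals $6 + 1/n$ algebraically, so the printed values approach 6, which is the derivative of $x^2$ at 3.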

Negative time intervals

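What happens if we do not choose $x_1$ in the future, but in the past of $\tilde{x}$, that is $x_1 < \tilde{x}$?

The average speed in the interval from $x_1$ to $\tilde{x}$ is then equal to $\tfrac{f(\tilde{x}) - f(x_1)}{\tilde{x} - x_1}$. If we expand this fraction by a factor of $-1$, we get

$$\frac{f(\tilde{x}) - f(x_1)}{\tilde{x} - x_1} = \frac{-(f(\tilde{x}) - f(x_1))}{-(\tilde{x} - x_1)} = \frac{f(x_1) - f(\tilde{x})}{x_1 - \tilde{x}}$$

We get the same term as in the previous section. It gives the average speed, no matter if $x_1 > \tilde{x}$ or $x_1 < \tilde{x}$. Thus, in the case of a negative time interval with $x_1 < \tilde{x}$, the average speed should also be close to the current speed of the car at the time $\tilde{x}$, provided $x_1$ is sufficiently close to $\tilde{x}$. Hence

$$f'(\tilde{x}) = \lim_{n \to \infty} \frac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$$

where $(x_n)_{n \in \mathbb{N}}$ is any sequence of arguments different from $\tilde{x}$ with $\lim_{n \to \infty} x_n = \tilde{x}$. The sequence elements $x_n$ can sometimes be larger and sometimes smaller than $\tilde{x}$, depending on the index $n$.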
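A sequence that jumps between past and future can be tried out in a few lines. This is only a sketch under assumptions: the function $f(x) = x^2$, the point $\tilde{x} = 3$ and the alternating sequence $x_n = \tilde{x} + (-1)^n/n$ are illustrative choices, and `difference_quotient` is a hypothetical helper name.

```python
def difference_quotient(f, x_tilde, x):
    """Same formula for x before or after x_tilde (requires x != x_tilde)."""
    return (f(x) - f(x_tilde)) / (x - x_tilde)


def f(x):
    # Illustrative assumption: f(x) = x^2
    return x ** 2


x_tilde = 3.0
ns = [1, 2, 3, 10, 11, 100, 101]
results = []
for n in ns:
    # Odd n gives a time before x_tilde, even n a time after x_tilde
    x_n = x_tilde + (-1) ** n / n
    q = difference_quotient(f, x_tilde, x_n)
    results.append((n, x_n, q))
    side = "before" if x_n < x_tilde else "after"
    print(f"n = {n:3d} ({side:6s}): x_n = {x_n:.5f}, quotient = {q:.5f}")
```

The quotients approach 6 from both sides, so the same limit is obtained regardless of whether the sequence elements lie before or after $\tilde{x}$.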

Refining the definition

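Let now $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ be a real-valued function and let $\tilde{x} \in D$. As we have seen above,

$$f'(\tilde{x}) = \lim_{n \to \infty} \frac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$$

where $(x_n)_{n \in \mathbb{N}}$ is a sequence of arguments different from $\tilde{x}$ which converges to $\tilde{x}$. In order to have at least one such sequence of arguments, $\tilde{x}$ must be an accumulation point of the domain $D$ (a number is an accumulation point of a set exactly when there is a sequence in this set which does not include that number but converges towards it). This may sound more complicated than it often is. In most cases $D$ is an interval with more than one point, and then every $\tilde{x} \in D$ is an accumulation point of $D$. For the definition of the differential quotient it should not matter which sequence we choose. Accordingly, we can define the derivative: $f$ has the derivative $f'(\tilde{x})$ at $\tilde{x}$ if for every sequence $(x_n)_{n \in \mathbb{N}}$ in $D \setminus \{\tilde{x}\}$ with $\lim_{n \to \infty} x_n = \tilde{x}$ there is

$$f'(\tilde{x}) = \lim_{n \to \infty} \frac{f(x_n) - f(\tilde{x})}{x_n - \tilde{x}}$$

We can shorten this definition by using limits of functions. As a reminder: by definition, $\lim_{x \to \tilde{x}} g(x) = c$ holds if and only if $\lim_{n \to \infty} g(x_n) = c$ for all sequences of arguments $(x_n)_{n \in \mathbb{N}}$ different from $\tilde{x}$ with $\lim_{n \to \infty} x_n = \tilde{x}$. So:

$$f'(\tilde{x}) = \lim_{x \to \tilde{x}} \frac{f(x) - f(\tilde{x})}{x - \tilde{x}}$$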

The h-method

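There is an equivalent way to define the derivative. We start from the differential quotient $\lim_{x \to \tilde{x}} \tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ and perform the substitution $x = \tilde{x} + h$. The new variable $h$ describes the difference between $x$ and the point $\tilde{x}$ where the difference quotient is formed. The limit $x \to \tilde{x}$ is equivalent to $h \to 0$. So we can also define the derivative as follows:

$$f'(\tilde{x}) = \lim_{h \to 0} \frac{f(\tilde{x} + h) - f(\tilde{x})}{h}$$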
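The h-method translates directly into a small numerical experiment. The sketch below assumes the illustrative function $f(x) = x^3$ and the point $\tilde{x} = 2$ (both chosen here, not taken from the text); `h_quotient` is a hypothetical name.

```python
def h_quotient(f, x_tilde, h):
    """Difference quotient written with the offset h = x - x_tilde (requires h != 0)."""
    return (f(x_tilde + h) - f(x_tilde)) / h


def f(x):
    # Illustrative assumption: f(x) = x^3
    return x ** 3


x_tilde = 2.0
# Positive and negative offsets that shrink towards 0
hs = [0.1, -0.1, 0.001, -0.001, 1e-5, -1e-5]
values = [h_quotient(f, x_tilde, h) for h in hs]

for h, v in zip(hs, values):
    print(f"h = {h:+.5f}: quotient = {v:.6f}")
```

Algebraically the quotient is $12 + 6h + h^2$ here, so the values approach 12 for positive as well as negative $h$.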

Applications in science and technology

We have come to know the derivative as the current rate of change of a quantity. As such, it occurs frequently in science or applications. Several variables are defined as rates of change, for example:

- Velocity: The velocity is the instantaneous rate of change of the distance travelled by an object.

- Acceleration: The acceleration is the instantaneous rate of change of the speed of an object.

- Pressure change: Let $p(h)$ be the air pressure at altitude $h$. The derivative $p'(h)$ is the rate of change of air pressure with altitude. This example shows that the rate of change need not always be related to time. It can also be the rate of change with respect to another quantity, e.g. altitude.

- Chemical reaction rate: Let's consider a chemical reaction $A \to B$. Let $c_A(t)$ be the concentration of the substance $A$ at time $t$. The derivative $c_A'(t)$ is the instantaneous rate of change of the concentration of $A$ and thus indicates how much of the substance $A$ is converted into the substance $B$. Thus $c_A'(t)$ indicates the chemical reaction rate for the reaction $A \to B$.

- Population growth: Often the number $N(t)$ of individuals in a population at time $t$ is considered (for example the number of people on the planet, the number of bacteria in a Petri dish, the number of animals of a species or the number of atoms of a radioactive substance). The derivative $N'(t)$ represents the instantaneous rate of change of the number of individuals at the time $t$.
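As an illustration of the first two items, velocity and acceleration can be approximated from a position function by difference quotients. This is a rough sketch under stated assumptions: the free-fall model $s(t) = \tfrac{g}{2} t^2$ with $g = 9.81$, the step size `h` and all function names are choices made here for illustration.

```python
G = 9.81  # assumed gravitational acceleration in m/s^2


def position(t):
    """Assumed model: distance fallen after t seconds, s(t) = G/2 * t^2."""
    return 0.5 * G * t ** 2


def rate_of_change(f, x, h=1e-4):
    """Approximate f'(x) by the difference quotient with a small offset h."""
    return (f(x + h) - f(x)) / h


def velocity(t):
    # Velocity is the rate of change of the position
    return rate_of_change(position, t)


def acceleration(t):
    # Acceleration is the rate of change of the velocity
    return rate_of_change(velocity, t)


t = 2.0
print(f"velocity     at t = {t}: {velocity(t):.4f}  (model value G*t = {G * t:.4f})")
print(f"acceleration at t = {t}: {acceleration(t):.4f}  (model value G = {G})")
```

The approximations are only as good as the step size allows; a very small `h` amplifies rounding errors, especially in the nested quotient for the acceleration.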

Definitions

Derivative and differentiability

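Definition (derivative and differentiability): Let $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ and let $\tilde{x} \in D$ be an accumulation point of $D$. The function $f$ is called differentiable at $\tilde{x}$ if the limit

$$f'(\tilde{x}) = \lim_{x \to \tilde{x}} \frac{f(x) - f(\tilde{x})}{x - \tilde{x}} = \lim_{h \to 0} \frac{f(\tilde{x} + h) - f(\tilde{x})}{h}$$

exists. This limit is then called the derivative of $f$ at $\tilde{x}$. The function $f$ is called differentiable if it is differentiable at every point of its domain.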

Difference quotient and differential quotient

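The terms "difference quotient" and "differential quotient" are mathematically defined as follows: For a function $f: D \to \mathbb{R}$ and a point $\tilde{x} \in D$, the difference quotient of $f$ at $\tilde{x}$ is the expression

$$\frac{f(x) - f(\tilde{x})}{x - \tilde{x}} \quad \text{for } x \in D \setminus \{\tilde{x}\}$$

The differential quotient is the limit of the difference quotient, provided it exists:

$$\lim_{x \to \tilde{x}} \frac{f(x) - f(\tilde{x})}{x - \tilde{x}}$$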

Derivative function

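If a function $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ is differentiable at every point within its domain of definition, then $f$ has a derivative at every point in $D$. The function that assigns to every argument $x$ the derivative $f'(x)$ is called derivative function of $f$:

Definition (derivative function): Let $f: D \to \mathbb{R}$ be a differentiable function. The derivative function of $f$ is the function

$$f': D \to \mathbb{R}, \quad x \mapsto f'(x)$$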


Notations

Historically, different notations have been developed to represent the derivative of a function. In this article we have only learned about the notation $f'$ for the derivative of $f$. It goes back to the mathematician Joseph-Louis Lagrange, who introduced it in 1797. Within this notation the second derivative of $f$ is denoted $f''$ and the $n$-th derivative is denoted $f^{(n)}$.

Isaac Newton (the founder of differential calculus alongside Leibniz) denoted the first derivative of $x$ with $\dot{x}$; accordingly, he denoted the second derivative by $\ddot{x}$. Nowadays this notation is mainly used in physics for the derivative with respect to time.

Gottfried Wilhelm Leibniz introduced for the first derivative of $f$ with respect to the variable $x$ the notation $\tfrac{\mathrm{d}f}{\mathrm{d}x}(x)$. This notation is read as "d f over d x of x". The second derivative is then denoted $\tfrac{\mathrm{d}^2 f}{\mathrm{d}x^2}(x)$ and the $n$-th derivative is written as $\tfrac{\mathrm{d}^n f}{\mathrm{d}x^n}(x)$.

The notation of Leibniz is, mathematically speaking, not a fraction! The symbols $\mathrm{d}f$ and $\mathrm{d}x$ are called differentials, but in modern calculus (apart from the theory of so-called "differential forms") they have only a symbolic meaning. They are only allowed in this notation as formal differential quotients. There are applications of derivatives (like the "chain rule" or "integration by substitution") in which the differentials $\mathrm{d}f$ or $\mathrm{d}x$ can be handled as if they were ordinary variables and in which one arrives at correct solutions. But since differentials have no independent meaning in modern calculus, such calculations are not mathematically rigorous.

The notation $Df$ or $D_x f$ for the first derivative of $f$ dates back to Leonhard Euler. In this notation, the second derivative is written as $D^2 f$ or $D_x^2 f$ and the $n$-th derivative as $D^n f$ or $D_x^n f$.

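Overview of notations

| Notation of … | 1st derivative | 2nd derivative | $n$-th derivative |
|---|---|---|---|
| Lagrange | $f'(x)$ | $f''(x)$ | $f^{(n)}(x)$ |
| Newton | $\dot{x}(t)$ | $\ddot{x}(t)$ | $\overset{n}{\dot{x}}(t)$ |
| Leibniz | $\tfrac{\mathrm{d}f}{\mathrm{d}x}(x)$ | $\tfrac{\mathrm{d}^2 f}{\mathrm{d}x^2}(x)$ | $\tfrac{\mathrm{d}^n f}{\mathrm{d}x^n}(x)$ |
| Euler | $Df(x)$ | $D^2 f(x)$ | $D^n f(x)$ |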

Derivative as tangential slope

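The derivative $f'(\tilde{x})$ corresponds to the limit $\lim_{x \to \tilde{x}} \tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$. The difference quotient $\tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ is the slope of the secant between the points $(\tilde{x}, f(\tilde{x}))$ and $(x, f(x))$. In the limit $x \to \tilde{x}$, this secant merges into the tangent that touches the graph of $f$ at the point $(\tilde{x}, f(\tilde{x}))$.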

Thus the derivative $f'(\tilde{x})$ is equal to the slope of the tangent to the graph through the point $(\tilde{x}, f(\tilde{x}))$. We may also use the derivative to compute the tangent to a graph, so it solves a geometric problem as well. With $f'(\tilde{x})$ we know the slope of the tangent. The offset can be determined using that $(\tilde{x}, f(\tilde{x}))$ is a point on the tangent. Together this gives the equation of the tangent:

$$t(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$$
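Determining a tangent from slope and point of contact can be sketched as follows. The example assumes the function $f(x) = x^2$ with its derivative $2x$ taken as known and the point of contact $\tilde{x} = 1$; the helper name `tangent_line` is hypothetical.

```python
def tangent_line(f, df, x_tilde):
    """Return (slope, offset) of the tangent t(x) = slope * x + offset at x_tilde."""
    slope = df(x_tilde)
    # The tangent passes through (x_tilde, f(x_tilde)), which fixes the offset
    offset = f(x_tilde) - slope * x_tilde
    return slope, offset


def f(x):
    # Illustrative assumption: f(x) = x^2
    return x ** 2


def df(x):
    # Derivative of x^2, taken as known here
    return 2 * x


slope, offset = tangent_line(f, df, 1.0)
print(f"t(x) = {slope} * x + ({offset})")
```

For this choice the tangent is $t(x) = 2x - 1$, which indeed passes through the point $(1, 1)$ on the parabola.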

Derivative as characterization of best approximations

Approximating a differentiable function

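The derivative can be used to approximate a function. One may even define the derivative as the slope of the "best linear approximation" to a function. To find this approximation we start with the definition of the derivative as a limit:

$$f'(\tilde{x}) = \lim_{x \to \tilde{x}} \frac{f(x) - f(\tilde{x})}{x - \tilde{x}}$$

The difference quotient $\tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ gets arbitrarily close to the derivative $f'(\tilde{x})$ if $x$ gets sufficiently close to $\tilde{x}$. For $x \approx \tilde{x}$ we can write:

$$\frac{f(x) - f(\tilde{x})}{x - \tilde{x}} \approx f'(\tilde{x})$$

In the following we assume that the relation $\approx$ for "is approximately as large as" is well defined and obeys the common arithmetic laws for equations. So we can change this equation to

$$f(x) \approx f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$$

If $x$ is sufficiently close to $\tilde{x}$, then $f(x)$ is approximately equal to $f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$. This value can thus be used as an approximation of $f(x)$ near the point $\tilde{x}$ where the derivative is taken. The function $t$ with the assignment rule $t(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$ is a linear function, since $\tilde{x}$ is an arbitrary but fixed point.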

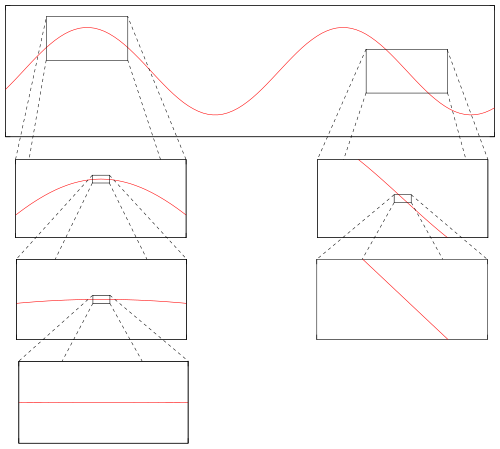

The assignment rule $t(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$ describes the tangent, which touches the graph of the function at the position where the derivative is taken. Thus, the tangent near the point of contact is a good approximation of the graph. If one zooms in close enough at a point of a differentiable function, the graph looks approximately like a straight line.

This line is described by the assignment rule $t(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$ and corresponds to the tangent of the graph at this position.

Example: The sine for small angles

Let's take a look at an example. For this we consider the sine function $\sin: \mathbb{R} \to \mathbb{R}$ near the point $\tilde{x} = 0$.

As we shall see, the derivative of the sine is the cosine and thus

$$\sin'(0) = \cos(0) = 1$$

The linear approximation of the sine near $0$ is hence

$$\sin(x) \approx \sin(0) + \sin'(0) \cdot (x - 0) = x$$

In the vicinity of zero, there is $\sin(x) \approx x$. This is the so-called small-angle approximation. Thus, $\sin(0.1)$ can be approximated by $0.1$. With $\sin(0.1) = 0.0998\ldots$ this approximation is also quite good. Near zero, the sine function can be described approximately by the line $y = x$.

This approximation is only good near the point where the derivative is taken. For values far away from zero, $\sin(x)$ differs greatly from $x$. The approximation is therefore only meaningful for small arguments!
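How quickly the small-angle approximation degrades can be checked with the standard library. The sample arguments below are arbitrary choices made for illustration.

```python
import math

# Arguments from very close to zero up to far away from zero
xs = [0.01, 0.1, 0.5, 1.0, 3.0]
# Absolute error of the approximation sin(x) ≈ x
errors = [abs(math.sin(x) - x) for x in xs]

for x, err in zip(xs, errors):
    print(f"x = {x:4.2f}: sin(x) = {math.sin(x):.6f}, error of sin(x) ≈ x: {err:.6f}")
```

The error is below $2 \cdot 10^{-4}$ at $x = 0.1$ but exceeds 2.8 at $x = 3$, which matches the observation that the approximation is only meaningful for small arguments.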

Quality of approximations

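How good is the approximation $f(x) \approx f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$? To answer this, let $\epsilon(x)$ be the value with

$$\frac{f(x) - f(\tilde{x})}{x - \tilde{x}} = f'(\tilde{x}) + \epsilon(x)$$

The value $\epsilon(x)$ is therefore the difference between the difference quotient $\tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ and the derivative $f'(\tilde{x})$. This difference disappears in the limit $x \to \tilde{x}$, because for this limit the difference quotient turns into the differential quotient, i.e. the derivative $f'(\tilde{x})$. Hence $\lim_{x \to \tilde{x}} \epsilon(x) = 0$. Now we can rearrange the above equation and get

$$f(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x}) + \epsilon(x) \cdot (x - \tilde{x})$$

The error between $f(x)$ and $f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x})$ is thus equal to the term $\delta(x) = \epsilon(x) \cdot (x - \tilde{x})$. Because of $\lim_{x \to \tilde{x}} \epsilon(x) = 0$ and $\lim_{x \to \tilde{x}} (x - \tilde{x}) = 0$ there is

$$\lim_{x \to \tilde{x}} \delta(x) = \lim_{x \to \tilde{x}} \epsilon(x) \cdot (x - \tilde{x}) = 0$$

So the error disappears for $x \to \tilde{x}$. But we can say even more: $\delta(x)$ decreases towards zero faster than a linear term. Even if we divide $\delta(x)$ by $x - \tilde{x}$ and thus greatly increase this term near $\tilde{x}$, the quotient $\tfrac{\delta(x)}{x - \tilde{x}}$ still disappears for $x \to \tilde{x}$. There is

$$\lim_{x \to \tilde{x}} \frac{\delta(x)}{x - \tilde{x}} = \lim_{x \to \tilde{x}} \epsilon(x) = 0$$

The error $\delta(x)$ in the approximation thus falls off to zero faster than linearly for $x \to \tilde{x}$. Let us summarize the previous argumentation in one theorem: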

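Theorem (approximation of a differentiable function): Let $f: D \to \mathbb{R}$ be differentiable at $\tilde{x} \in D$. Then there is a function $\delta: D \to \mathbb{R}$ such that for all $x \in D$

$$f(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x}) + \delta(x) \quad \text{and} \quad \lim_{x \to \tilde{x}} \frac{\delta(x)}{x - \tilde{x}} = 0$$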
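That the approximation error vanishes faster than linearly can be observed numerically. The sketch below assumes the exponential function at the point 0, where the derivative $\exp(0) = 1$ is taken as known; the function and the sample points are illustrative choices, and the name `delta` is used here for the error term of the linear approximation.

```python
import math


def f(x):
    # Illustrative assumption: f is the exponential function
    return math.exp(x)


x_tilde = 0.0
slope = 1.0  # derivative of exp at 0, taken as known


def delta(x):
    """Error of the linear approximation f(x_tilde) + slope * (x - x_tilde)."""
    return f(x) - (f(x_tilde) + slope * (x - x_tilde))


xs = [0.1, 0.01, 0.001]
# Error divided by the distance to x_tilde
ratios = [delta(x) / (x - x_tilde) for x in xs]

for x, r in zip(xs, ratios):
    print(f"x = {x:5.3f}: delta(x) = {delta(x):.3e}, delta(x) / (x - x_tilde) = {r:.3e}")
```

Each time the distance to 0 shrinks by a factor of 10, the ratio shrinks by roughly the same factor, so even the error divided by the distance tends to zero.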

Alternative definition of the derivative

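The fact that differentiable functions can be approximated by linear functions characterises the derivative. A function $f: D \to \mathbb{R}$ is differentiable at the position $\tilde{x}$ if and only if a real number $c$ (the best approximation parameter) as well as a function $\delta: D \to \mathbb{R}$ exist, such that $f(x) = f(\tilde{x}) + c \cdot (x - \tilde{x}) + \delta(x)$ and $\lim_{x \to \tilde{x}} \tfrac{\delta(x)}{x - \tilde{x}} = 0$ hold. Its derivative is then $f'(\tilde{x}) = c$, because

$$\lim_{x \to \tilde{x}} \frac{f(x) - f(\tilde{x})}{x - \tilde{x}} = \lim_{x \to \tilde{x}} \frac{c \cdot (x - \tilde{x}) + \delta(x)}{x - \tilde{x}} = c + \lim_{x \to \tilde{x}} \frac{\delta(x)}{x - \tilde{x}} = c$$

So we can also define the derivative as follows:

Definition (alternative definition of the derivative): Let $f: D \to \mathbb{R}$ and let $\tilde{x} \in D$ be an accumulation point of $D$. The function $f$ is differentiable at $\tilde{x}$ with derivative $f'(\tilde{x}) = c$ if there is a function $\delta: D \to \mathbb{R}$ with $f(x) = f(\tilde{x}) + c \cdot (x - \tilde{x}) + \delta(x)$ for all $x \in D$ and $\lim_{x \to \tilde{x}} \tfrac{\delta(x)}{x - \tilde{x}} = 0$.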

Describing derivatives using a continuous function

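There is a further characterisation of the derivative. We start with the formula

$$f(x) = f(\tilde{x}) + f'(\tilde{x}) \cdot (x - \tilde{x}) + \epsilon(x) \cdot (x - \tilde{x})$$

where $\epsilon(x)$ is the difference between the difference quotient and the derivative (which disappears for $x \to \tilde{x}$). If we rearrange this formula we get:

$$f(x) = f(\tilde{x}) + \left( f'(\tilde{x}) + \epsilon(x) \right) \cdot (x - \tilde{x})$$

The function $\varphi(x) = f'(\tilde{x}) + \epsilon(x)$ for $x \neq \tilde{x}$ has the property

$$\lim_{x \to \tilde{x}} \varphi(x) = f'(\tilde{x}) + \lim_{x \to \tilde{x}} \epsilon(x) = f'(\tilde{x})$$

Thus $\varphi$ can be extended to a function which is continuous at the position $\tilde{x}$, whereby the function value is set to $\varphi(\tilde{x}) = f'(\tilde{x})$. This representation of a differentiable function allows a further characterisation of differentiable functions: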

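Theorem: Let $f: D \to \mathbb{R}$ and let $\tilde{x} \in D$ be an accumulation point of $D$. Then $f$ is differentiable at $\tilde{x}$ if and only if there is a function $\varphi: D \to \mathbb{R}$ which is continuous at $\tilde{x}$ and satisfies $f(x) = f(\tilde{x}) + \varphi(x) \cdot (x - \tilde{x})$ for all $x \in D$. In this case $f'(\tilde{x}) = \varphi(\tilde{x})$.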
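The continuous function in this characterisation can be written down explicitly for a concrete case. The sketch below assumes $f(x) = x^3$ at the point 1, with the derivative value 3 taken as known; the name `phi` and these choices are illustrative.

```python
def f(x):
    # Illustrative assumption: f(x) = x^3
    return x ** 3


x_tilde = 1.0
derivative_at_x_tilde = 3.0  # derivative of x^3 at 1, taken as known


def phi(x):
    """Difference quotient away from x_tilde, derivative value at x_tilde itself."""
    if x == x_tilde:
        return derivative_at_x_tilde
    return (f(x) - f(x_tilde)) / (x - x_tilde)


for x in [1.5, 1.1, 1.01, 1.001, 1.0]:
    # f can be recovered from phi via f(x) = f(x_tilde) + phi(x) * (x - x_tilde)
    reconstructed = f(x_tilde) + phi(x) * (x - x_tilde)
    print(f"x = {x:5.3f}: phi(x) = {phi(x):.6f}, reconstructed f(x) = {reconstructed:.6f}")
```

Here the quotient simplifies to $x^2 + x + 1$ for $x \neq 1$, so its values approach 3 and the extension by the derivative value is continuous at 1.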

Derivative as generalized slope

The slope is initially only defined for linear functions $f: \mathbb{R} \to \mathbb{R}$ with the assignment rule $f(x) = mx + b$ where $m, b \in \mathbb{R}$. For such functions the slope is equal to the value $m$ and can be calculated using the difference quotient. For two different arguments $x_1$ and $x_2$ from the domain of definition there is:

$$\frac{f(x_2) - f(x_1)}{x_2 - x_1} = \frac{(m x_2 + b) - (m x_1 + b)}{x_2 - x_1} = \frac{m \cdot (x_2 - x_1)}{x_2 - x_1} = m$$

Now $m$ is also the derivative of $f$ at every accumulation point $\tilde{x}$ of the domain of definition:

$$f'(\tilde{x}) = \lim_{x \to \tilde{x}} \frac{(m x + b) - (m \tilde{x} + b)}{x - \tilde{x}} = \lim_{x \to \tilde{x}} m = m$$

The derivative of a linear function is therefore always equal to its slope. But the derivative is more general: it is defined for all differentiable functions. (Remember: A concept $A$ is a generalisation of another concept $B$ if $A$ is the same as $B$ in all cases where $B$ is defined, and $A$ can also be applied to other cases.)

So we can consider the derivative as the slope of a function at a point. In the transition from slope to derivative, a global property (the slope of a linear function is defined for the whole function) turns into a local property (the derivative is the instantaneous rate of change of a function at a point).

Examples

Example of a differentiable function

Math for Non-Geeks: Template:Beispiel

Example of a non-differentiable function

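Example (absolute value function): The absolute value function $f: \mathbb{R} \to \mathbb{R}$ with $f(x) = |x|$ is not differentiable at $\tilde{x} = 0$. For the sequence $x_n = \tfrac{1}{n}$ the difference quotient $\tfrac{|x_n| - |0|}{x_n - 0}$ is always equal to $1$, while for the sequence $x_n = -\tfrac{1}{n}$ it is always equal to $-1$. Both sequences converge to $0$, but the difference quotients have different limits. So the limit $\lim_{x \to 0} \tfrac{|x| - |0|}{x - 0}$ does not exist.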

Left-hand and right-hand derivative

Definition

The derivative of a function $f: D \to \mathbb{R}$ is the limit of the difference quotient for $x \to \tilde{x}$. The difference quotient can be understood as a function $x \mapsto \tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$, which is defined for all $x \in D$ except for $\tilde{x}$. So $f'(\tilde{x})$ is actually the limit of a function.

The concepts of left-hand and right-hand limit can also be applied to the difference quotient. Thus we obtain the terms "left-hand" and "right-hand" derivative. For the left-hand derivative, only secants to the left of the considered point are evaluated. So only difference quotients $\tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ are considered where $x < \tilde{x}$. Then it is checked whether the difference quotient converges to a number in the limit $x \to \tilde{x}$. If the answer is yes, then this number is the left-hand derivative at that point:

$$f'_-(\tilde{x}) = \lim_{x \uparrow \tilde{x}} \frac{f(x) - f(\tilde{x})}{x - \tilde{x}}$$

Here $f'_-(\tilde{x})$ is the notation for the left-hand derivative of $f$ at the position $\tilde{x}$, and $x \uparrow \tilde{x}$ means that $x$ approaches $\tilde{x}$ with $x < \tilde{x}$. For this limit to make sense, there must be at least one sequence of arguments that converges from the left towards $\tilde{x}$. So $\tilde{x}$ has to be an accumulation point of the set $\{x \in D : x < \tilde{x}\}$.

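Definition (left-hand derivative): Let $f: D \to \mathbb{R}$ and let $\tilde{x} \in D$ be an accumulation point of $\{x \in D : x < \tilde{x}\}$. If the limit $f'_-(\tilde{x}) = \lim_{x \uparrow \tilde{x}} \tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ exists, it is called the left-hand derivative of $f$ at $\tilde{x}$.

Analogously, the right-hand derivative can be defined as follows:

Definition (right-hand derivative): Let $f: D \to \mathbb{R}$ and let $\tilde{x} \in D$ be an accumulation point of $\{x \in D : x > \tilde{x}\}$. If the limit $f'_+(\tilde{x}) = \lim_{x \downarrow \tilde{x}} \tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}}$ exists, it is called the right-hand derivative of $f$ at $\tilde{x}$.

A function has a limit at a position only if both the left-hand and the right-hand limit exist at this position and both limits coincide. We can apply this theorem directly to the difference quotient: if $\tilde{x}$ can be approached from both sides within $D$, then $f$ is differentiable at $\tilde{x}$ if and only if both one-sided derivatives exist and $f'_-(\tilde{x}) = f'_+(\tilde{x})$. In this case $f'(\tilde{x}) = f'_-(\tilde{x}) = f'_+(\tilde{x})$.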

Example

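We have already shown that the absolute value function $f(x) = |x|$ is not differentiable at $\tilde{x} = 0$. However, we can still show that the right-hand derivative exists at this position and is equal to $1$:

$$f'_+(0) = \lim_{x \downarrow 0} \frac{|x| - |0|}{x - 0} = \lim_{x \downarrow 0} \frac{x}{x} = 1$$

Analogously, we can show that the left-hand derivative is equal to $-1$ at this position:

$$f'_-(0) = \lim_{x \uparrow 0} \frac{|x| - |0|}{x - 0} = \lim_{x \uparrow 0} \frac{-x}{x} = -1$$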

Since the right-hand and left-hand derivatives do not coincide, the absolute value function cannot be differentiated at . At this point, it has left-hand and right-hand derivatives, but no general derivative.
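The two one-sided derivatives of the absolute value function at 0 can also be observed numerically. This is a minimal sketch; the helper name `one_sided_quotients` and the sequence $\pm 1/n$ are choices made here for illustration.

```python
def one_sided_quotients(f, x_tilde, sign, ns):
    """Difference quotients at x_tilde + sign/n; sign=+1 is the right side, -1 the left."""
    return [(f(x_tilde + sign / n) - f(x_tilde)) / (sign / n) for n in ns]


ns = [1, 10, 100, 1000]
# Python's built-in abs plays the role of the absolute value function
right = one_sided_quotients(abs, 0.0, +1, ns)
left = one_sided_quotients(abs, 0.0, -1, ns)

print("from the right:", right)  # [1.0, 1.0, 1.0, 1.0]
print("from the left: ", left)   # [-1.0, -1.0, -1.0, -1.0]
```

The quotients are constant on each side, so the one-sided limits are 1 and -1 and no common limit exists.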


Differentiable functions do not have kinks

In the above example we have seen that the absolute value function is not differentiable at $0$. This is because the absolute value function "has a kink" at the position $0$, so that the left-hand and right-hand derivative are different. If we approach $0$ from the left-hand side, the derivative is equal to $-1$, while the derivative from the right-hand side is equal to $1$. The kink in the absolute value function thus prevents differentiability.

So if a function has a kink, it is not differentiable at this point. In other words: differentiable functions are kink-free. Therefore they are also called smooth functions (strictly speaking, smooth means "infinitely many times differentiable"). This does not mean, however, that kink-free functions are automatically differentiable. As an example, let us consider the sign function $\operatorname{sgn}: \mathbb{R} \to \mathbb{R}$ with the definition

$$\operatorname{sgn}(x) = \begin{cases} 1 & \text{if } x > 0 \\ 0 & \text{if } x = 0 \\ -1 & \text{if } x < 0 \end{cases}$$

This function is not differentiable at the zero point $x = 0$, because near the "jump" of the function, the difference quotient tends to infinity. For the right-hand derivative there is for example:

$$\lim_{x \downarrow 0} \frac{\operatorname{sgn}(x) - \operatorname{sgn}(0)}{x - 0} = \lim_{x \downarrow 0} \frac{1}{x} = \infty$$

The sign function has no kink at the zero point. Instead, it makes a "jump" there.
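The blow-up of the difference quotient at the jump is easy to reproduce. The sketch below implements the sign function by hand (the name `sgn` and the offsets $10^{-k}$ are choices made here) and evaluates right-hand difference quotients at 0.

```python
def sgn(x):
    """Sign function: 1 for positive x, -1 for negative x, 0 at zero."""
    if x > 0:
        return 1
    if x < 0:
        return -1
    return 0


# Offsets 0.1, 0.01, ..., 0.000001 approaching 0 from the right
hs = [10.0 ** (-k) for k in range(1, 7)]
right_quotients = [(sgn(0 + h) - sgn(0)) / (h - 0) for h in hs]

for h, q in zip(hs, right_quotients):
    print(f"h = {h:.6f}: difference quotient = {q:.1f}")
```

Each quotient equals $1/h$, so the values grow without bound instead of settling on a number.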

The example of the sign function shows that being "free of kinks" and being "differentiable" cannot be the same. However, freedom from kinks is a prerequisite for differentiability. So differentiable functions are free of kinks.

Relations between differentiability, continuity and continuous differentiability

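Continuous differentiability of a function implies its differentiability, which in turn implies its continuity. The converse statements do not hold, as we will see in the course of this section:

$$f \text{ continuously differentiable} \implies f \text{ differentiable} \implies f \text{ continuous}$$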

The first implication follows directly from the definition: A function is called continuously differentiable if it is differentiable and the derivative function is continuous. Thus, continuously differentiable functions are also differentiable. The second implication needs some more work:

Differentiable functions are continuous

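We now show that every function which is differentiable at a point is also continuous at this point. Thus, differentiability is a stronger condition for a function than continuity:

Theorem: Let $f: D \to \mathbb{R}$ be differentiable at $\tilde{x} \in D$. Then $f$ is continuous at $\tilde{x}$. Indeed, for $x \neq \tilde{x}$ we can write $f(x) - f(\tilde{x}) = \tfrac{f(x) - f(\tilde{x})}{x - \tilde{x}} \cdot (x - \tilde{x})$. For $x \to \tilde{x}$ the first factor converges to $f'(\tilde{x})$ and the second factor converges to $0$, so $\lim_{x \to \tilde{x}} f(x) = f(\tilde{x})$.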

Application: Non-continuous functions are not differentiable

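From the previous section we know that every differentiable function is continuous:

$$f \text{ differentiable at } \tilde{x} \implies f \text{ continuous at } \tilde{x}$$

Applying the principle of contraposition to this implication, we also get:

$$f \text{ not continuous at } \tilde{x} \implies f \text{ not differentiable at } \tilde{x}$$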

Example: Non-continuous functions are not differentiable

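Take, for example, the sign function

$$\operatorname{sgn}(x) = \begin{cases} 1 & \text{if } x > 0 \\ 0 & \text{if } x = 0 \\ -1 & \text{if } x < 0 \end{cases}$$

It is not continuous at $0$. So it is also not differentiable there. We can verify the non-differentiability directly by taking the sequence $x_n = \tfrac{1}{n}$. This sequence converges towards zero. If the sign function was differentiable at $0$, then the limit $\lim_{n \to \infty} \tfrac{\operatorname{sgn}(x_n) - \operatorname{sgn}(0)}{x_n - 0}$ would have to exist. However

$$\lim_{n \to \infty} \frac{\operatorname{sgn}\left(\tfrac{1}{n}\right) - \operatorname{sgn}(0)}{\tfrac{1}{n} - 0} = \lim_{n \to \infty} \frac{1 - 0}{\tfrac{1}{n}} = \lim_{n \to \infty} n = \infty$$

The limit does not exist in $\mathbb{R}$. Therefore the sign function is, as expected, not differentiable at $0$.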

Not every differentiable function is continuously differentiable

In the following example, we already use some derivative rules, which will be discussed in more detail in the next chapter. Perhaps you already know them from school. If not, they offer a useful preview of what will follow.

Math for Non-Geeks: Template:Beispiel

Exercises

Hyperbolic function

Math for Non-Geeks: Template:Aufgabe

Root function

Math for Non-Geeks: Template:Aufgabe

Determining limits

Math for Non-Geeks: Template:Gruppenaufgabe

Criterion for differentiability

Math for Non-Geeks: Template:Aufgabe

Sources

- Section "Notationen" of the Wikipedia article "Differentialrechnungen", retrieved 7 August 2016. Authors of this section include Benutzer:Christian1985, Benutzer:Roomsixhu, Benutzer:FranzR, Benutzer:DerSpezialist and Benutzer:SaltzGurke. This section is licensed under CC-BY-SA 3.0.

- Section "Example" of the Wikipedia article "Derivative", retrieved 14 August 2016. This section is licensed under CC-BY-SA 3.0.

- Greefrath, G., Oldenburg, R., Siller, H. S., Ulm, V., & Weigand, H. G. (2016). Aspects and "Grundvorstellungen" of the Concepts of Derivative and Integral. Journal für Mathematik-Didaktik, 37(1), 99-129.